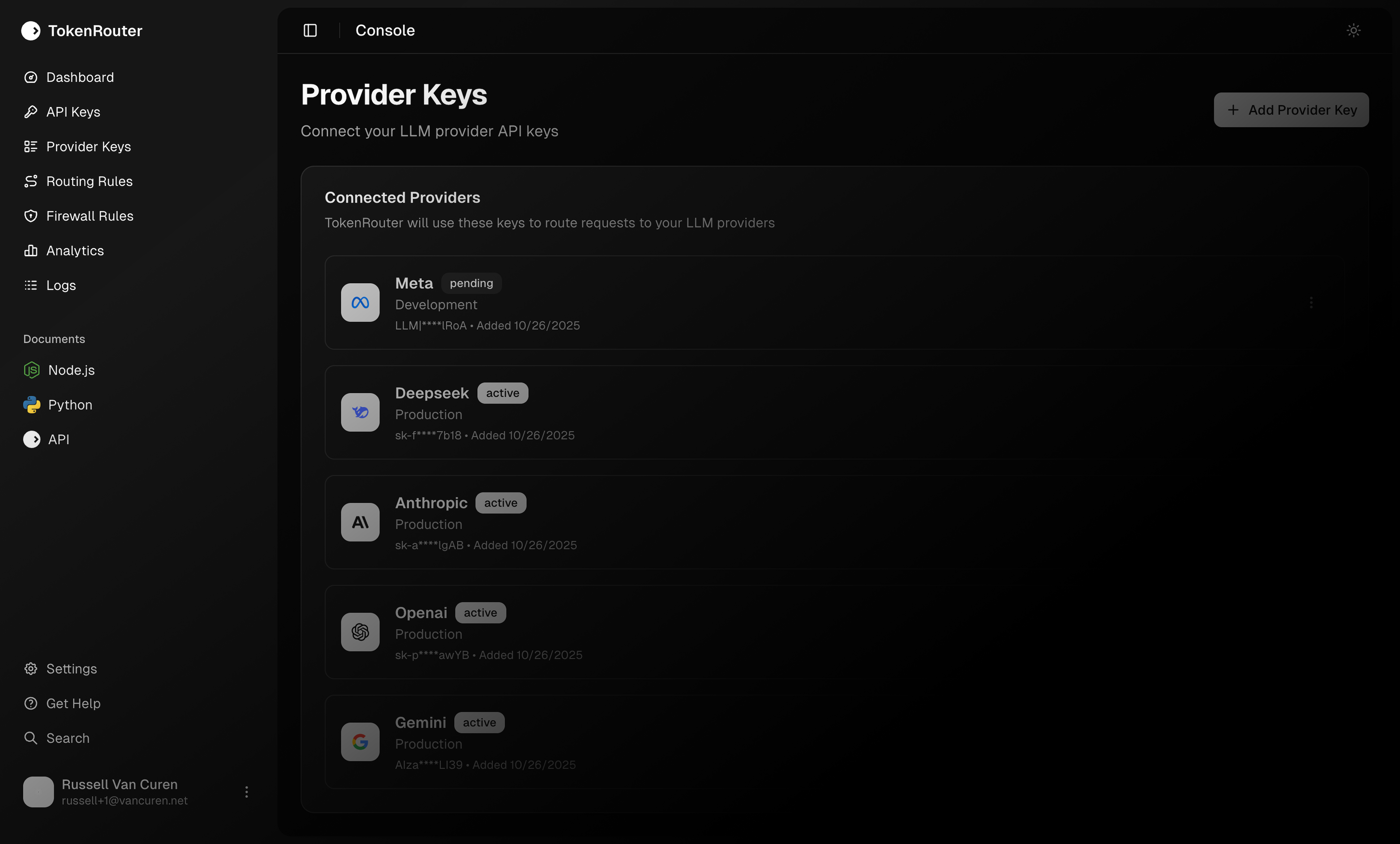

Join our community of developers saving on their AI costs every month.

Route smarter. Spend less. The compliance router for LLMs.

Ship AI fast without risking data or fines. Define rules, firewall traffic, and auto-route to approved models — with immutable audits your security team will love.

Rule Engine

Create context-based rules for model selection.

LLM Firewall

Handle inline content filtering and moderation.

Routing + Savings

Approve a preferred set of models; route by latency/cost/quality.

Audit & Analytics

Per-request trail: policies matched, redactions applied, models used.
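The page doesn't say how TokenRouter makes its audit trail immutable. One common technique is a hash chain: each record stores a SHA-256 digest computed over its own fields plus the previous record's digest, so editing or reordering an entry makes verification fail. The sketch below assumes that approach; `AuditLog`, `AuditRecord`, and the field names are hypothetical, mirroring the per-request fields listed above (policies matched, redactions applied, model used).

```python
import hashlib
import json
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class AuditRecord:
    # Hypothetical per-request fields, not TokenRouter's actual schema.
    request_id: str
    policies_matched: tuple
    redactions_applied: tuple
    model_used: str
    prev_hash: str
    digest: str

    def payload(self) -> dict:
        """The request fields covered by the digest (excludes the hashes themselves)."""
        return {
            "request_id": self.request_id,
            "policies_matched": list(self.policies_matched),
            "redactions_applied": list(self.redactions_applied),
            "model_used": self.model_used,
        }


GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def chain_digest(prev_hash: str, payload: dict) -> str:
    # Canonical JSON (sorted keys, no spaces) so identical payloads hash identically.
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only, hash-chained log (hypothetical helper for illustration)."""

    def __init__(self) -> None:
        self.records = []

    def append(self, request_id, policies, redactions, model) -> AuditRecord:
        prev = self.records[-1].digest if self.records else GENESIS
        draft = AuditRecord(request_id, tuple(policies), tuple(redactions), model, prev, "")
        # Fill in the digest once the covered fields are fixed.
        record = replace(draft, digest=chain_digest(prev, draft.payload()))
        self.records.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every digest; an edited or reordered record breaks the chain."""
        prev = GENESIS
        for rec in self.records:
            if rec.prev_hash != prev or rec.digest != chain_digest(prev, rec.payload()):
                return False
            prev = rec.digest
        return True
```

A hash chain on its own does not detect records truncated from the end of the log; systems that need that guarantee usually also anchor the latest digest somewhere external.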

Drop-in replacement. Zero complexity.

Replace your existing OpenAI client with TokenRouter in seconds. Same API, massive savings.

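```python
from tokenrouter import TokenRouter

# Create a client with your TokenRouter API key
client = TokenRouter(api_key="tr_...")

# An "auto" model lets TokenRouter choose the model for each request
response = client.responses.create(
    model="auto:balance",
    input="How can tokenrouter.io help me?",
)

print(response.output_text)
```

Install TokenRouter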

```bash
pip install tokenrouter
```

Change one import

Replace `openai` with `tokenrouter`

Start saving immediately

Set `model="auto"` and watch the savings roll in

Operational intelligence for your LLM infrastructure.

See every token, cost, and latency metric in one intuitive console. Replace chaos with clarity. Monitor everything that matters, live. The first dashboard built for AI routing, not billing.

Quick Start

Connect providers and start routing immediately. No vendor lock-in. No new SDKs. No migration risk.

Routing Intelligence

Choose the right model every time, automatically. Simple queries route to cheaper models; complex ones get smarter models.
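How TokenRouter judges query complexity isn't specified. As a rough sketch of the idea, assuming a simple heuristic rather than a learned classifier, the function below scores a prompt by length, embedded code, and reasoning-heavy keywords, then picks between two tiers. `CHEAP_MODEL`, `STRONG_MODEL`, the keyword list, and the 0.25 threshold are all illustrative placeholders.

```python
import re

# Hypothetical model tiers; substitute the models your organization has approved.
CHEAP_MODEL = "cheap-model"
STRONG_MODEL = "strong-model"

# Illustrative keywords suggesting a prompt needs more reasoning. A real router
# would more likely use a trained classifier than keyword matching.
COMPLEX_HINTS = re.compile(
    r"\b(prove|derive|refactor|analy[sz]e|step[- ]by[- ]step|architecture)\b",
    re.IGNORECASE,
)


def estimate_complexity(prompt: str) -> float:
    """Return a rough score in [0, 1] from length, code presence, and keyword hints."""
    score = min(len(prompt) / 2000, 1.0) * 0.5  # length contributes at most 0.5
    if "```" in prompt:
        score += 0.25  # embedded code block
    if COMPLEX_HINTS.search(prompt):
        score += 0.25  # reasoning-heavy wording
    return min(score, 1.0)


def choose_model(prompt: str, threshold: float = 0.25) -> str:
    """Send low-complexity prompts to the cheap tier and everything else to the strong tier."""
    return STRONG_MODEL if estimate_complexity(prompt) >= threshold else CHEAP_MODEL
```

With these placeholder weights, a short factual question stays on the cheap tier, while any prompt containing a hint word such as "refactor" crosses the threshold.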

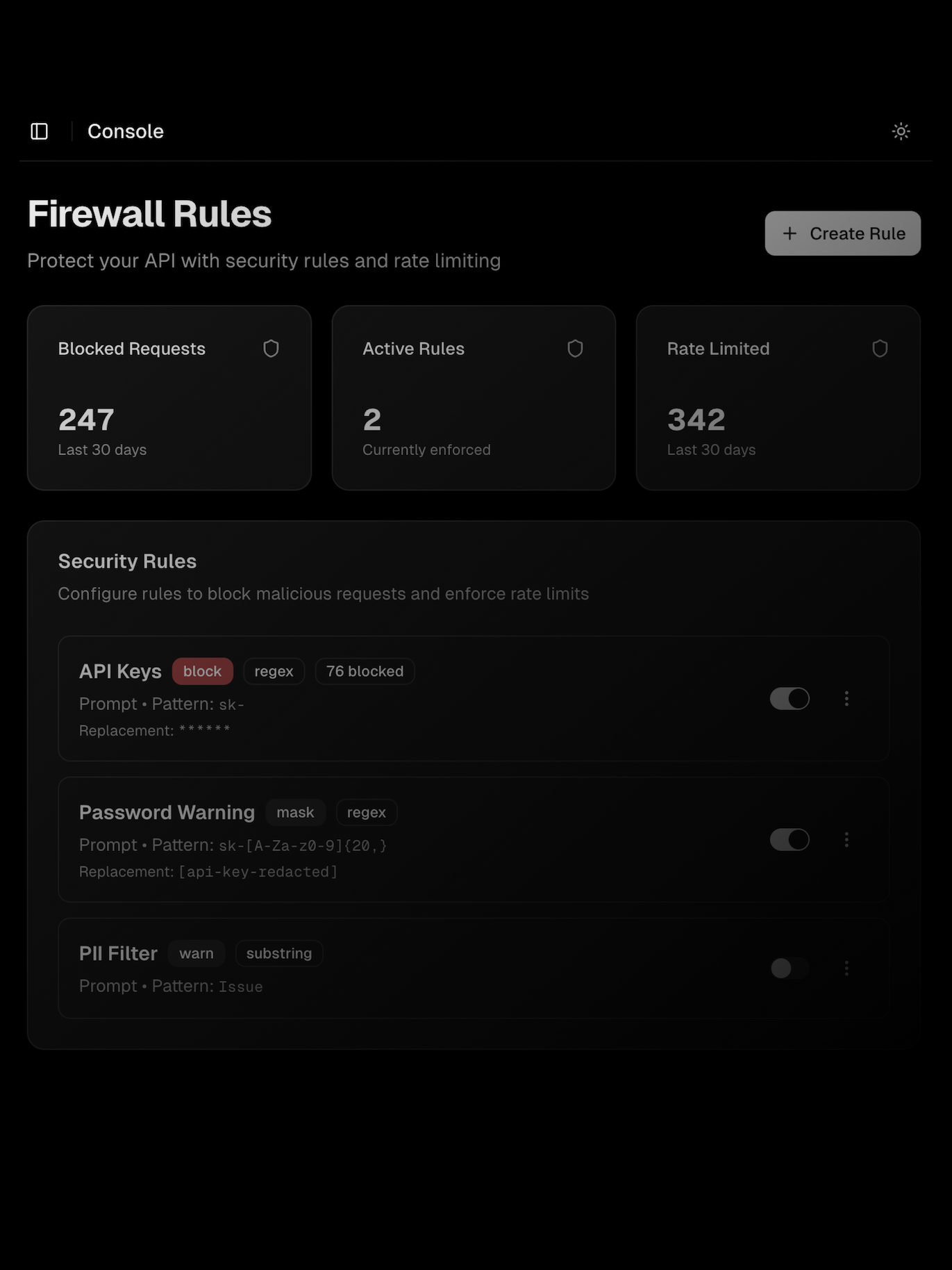

Firewall Protection

Secure everything at the routing layer — rate limits, IP filters, content safety. Protection built for AI-scale traffic.
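Rate limiting at a routing layer is commonly implemented with one token bucket per client. The sketch below shows that general technique, not TokenRouter's implementation; keying buckets by a client ID string (for example an API key or source IP) and the default rate and burst values are assumptions.

```python
import time


class TokenBucket:
    """Token-bucket limiter: refills `rate` tokens per second, holding at most `capacity`."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so an initial burst is allowed
        self.clock = clock  # injectable clock makes the limiter easy to test
        self.updated = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Consume `cost` tokens if available; otherwise reject the request."""
        now = self.clock()
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# One bucket per client (hypothetical keying, e.g. by API key or source IP).
buckets = {}


def check(client_id: str, rate: float = 5.0, burst: float = 10.0) -> bool:
    if client_id not in buckets:
        buckets[client_id] = TokenBucket(rate, burst)
    return buckets[client_id].allow()
```

The bucket allows short bursts up to `capacity` while holding the long-run average to `rate` requests per second.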

Real-time Analytics

Visualize spend, latency, and success rate across every provider. Know exactly where your tokens go — and why.
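Per-provider analytics like these reduce to grouping request logs and aggregating them. The sketch below assumes a hypothetical `RequestLog` record and made-up per-million-token prices (not real provider rates) to show how spend, average latency, and success rate can be computed.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class RequestLog:
    # Hypothetical log fields for one routed request.
    provider: str
    input_tokens: int
    output_tokens: int
    latency_ms: float
    ok: bool


# Illustrative USD prices per 1M tokens as (input, output); NOT real provider rates.
PRICES = {"provider-a": (0.50, 1.50), "provider-b": (3.00, 15.00)}


def summarize(logs):
    """Aggregate request count, spend, mean latency, and success rate per provider."""
    groups = defaultdict(list)
    for log in logs:
        groups[log.provider].append(log)

    summary = {}
    for provider, rows in groups.items():
        in_price, out_price = PRICES[provider]
        spend = sum(r.input_tokens * in_price + r.output_tokens * out_price for r in rows) / 1_000_000
        summary[provider] = {
            "requests": len(rows),
            "spend_usd": spend,
            "avg_latency_ms": sum(r.latency_ms for r in rows) / len(rows),
            "success_rate": sum(r.ok for r in rows) / len(rows),  # True counts as 1
        }
    return summary
```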

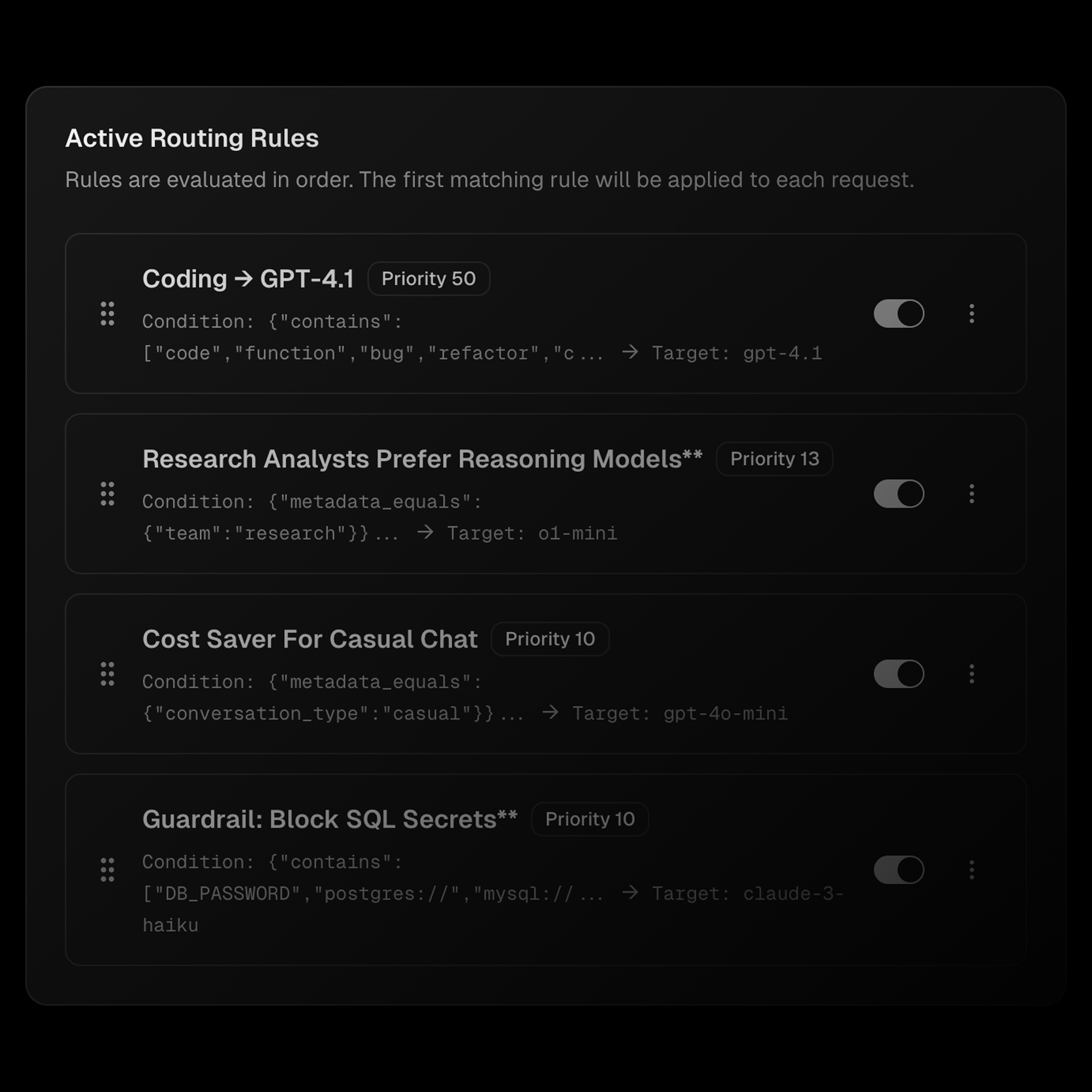

Extend your capability with rule-based routing.

Create routing logic that adapts to context, complexity, or user profile — with JSON or no-code rules.
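The page doesn't publish TokenRouter's rule schema, so the format below is hypothetical. It illustrates one way JSON routing rules can work: each rule lists conditions on the request context (all must hold), rules are checked in order, the first match decides the model, and a default applies when nothing matches. The field names (`contains_pii`, `region`, `prompt_tokens`) and model names are placeholders.

```python
import json

# Hypothetical rule format; TokenRouter's actual rule schema may differ.
RULES_JSON = """
[
  {"name": "pii-to-internal", "when": {"contains_pii": {"eq": true}}, "route": "internal-model"},
  {"name": "eu-users", "when": {"region": {"in": ["EU"]}}, "route": "eu-hosted-model"},
  {"name": "long-context", "when": {"prompt_tokens": {"gte": 8000}}, "route": "long-context-model"}
]
"""

# Supported comparison operators; a missing context field arrives as None.
OPS = {
    "eq": lambda actual, expected: actual == expected,
    "in": lambda actual, expected: actual in expected,
    "gte": lambda actual, expected: actual is not None and actual >= expected,
}


def matches(conditions: dict, context: dict) -> bool:
    """A rule matches when every operator on every field holds (logical AND)."""
    for field_name, tests in conditions.items():
        actual = context.get(field_name)
        for op, expected in tests.items():
            if not OPS[op](actual, expected):
                return False
    return True


def route(context: dict, rules: list, default: str = "default-model") -> tuple:
    """Return (model, rule name) for the first matching rule, else (default, None)."""
    for rule in rules:
        if matches(rule["when"], context):
            return rule["route"], rule["name"]
    return default, None


rules = json.loads(RULES_JSON)
```

Ordering matters: a request that contains PII goes to `internal-model` even when it also comes from the EU, because that rule is listed first.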

Advanced conditional logic

Implement complex routing rules based on context, complexity, and intent.

Smart routing decisions

Route based on latency, cost, or provider health automatically.

Automated fallback

Automate fallback and safety constraints for reliability and compliance.
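A minimal sketch of latency/cost/quality ranking combined with automated fallback, assuming a hypothetical `Candidate` record and a caller-supplied `send` function that performs the actual provider call. Healthy candidates are ranked by a weighted score (the weights are arbitrary), tried in order, and any model that raises is marked unhealthy so later requests skip it. This is a generic pattern, not TokenRouter's documented behavior.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    model: str
    cost: float  # relative cost, lower is better (illustrative units)
    latency_ms: float  # recent typical latency
    quality: float  # 0..1 quality estimate
    healthy: bool = True


def score(c: Candidate, w_cost=1.0, w_latency=0.001, w_quality=2.0) -> float:
    """Lower is better: penalize cost and latency, reward quality (weights are arbitrary)."""
    return w_cost * c.cost + w_latency * c.latency_ms - w_quality * c.quality


class AllProvidersFailed(Exception):
    pass


def call_with_fallback(candidates, send, **weights):
    """Try healthy candidates best score first; on error, fall back to the next one."""
    ranked = sorted((c for c in candidates if c.healthy), key=lambda c: score(c, **weights))
    errors = []
    for c in ranked:
        try:
            return c.model, send(c.model)
        except Exception as exc:  # a real router would catch provider-specific errors
            errors.append((c.model, exc))
            c.healthy = False  # naive circuit breaker: skip this model from now on
    raise AllProvidersFailed(errors)
```

Marking a model unhealthy forever is deliberately simplistic; a production circuit breaker would re-enable it after a cooldown or a successful health probe.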

Built for Scaling Teams.

Firewall-grade protection for your AI API. Protect every request: auto-detect and redact API keys, PII, and credentials. Implement rate limiting, stop prompt injection, and prevent data exfiltration attacks, all built in.

- Sensitive Data Control
- Enforce Compliance
- Geographic Restrictions
- Monitor in Real Time
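Inline redaction of keys and PII can be sketched with pattern matching. The detectors below are illustrative assumptions: an email pattern, the `sk-` prefix used by OpenAI API keys, the `AKIA` prefix of long-term AWS access key IDs, and US SSN formatting. Real firewalls typically combine far broader pattern sets with ML-based entity recognition. The function returns the redacted text plus the labels that fired, which could feed a per-request audit record.

```python
import re

# Illustrative detectors only; production coverage needs many more patterns.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "OPENAI_KEY": re.compile(r"\bsk-[A-Za-z0-9_-]{20,}"),
    "AWS_ACCESS_KEY": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace each match with a [LABEL] placeholder and report which labels fired."""
    fired = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            fired.append(label)
    return text, fired
```

For example, `redact("Contact jane.doe@example.com, key sk-abcdefghijklmnopqrstuvwx")` yields `"Contact [EMAIL], key [OPENAI_KEY]"` with the labels `["EMAIL", "OPENAI_KEY"]`.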

Unified access to the entire LLM ecosystem.

TokenRouter connects OpenAI, Anthropic, Meta, Google, Mistral, and DeepSeek into a single intelligent layer. Add keys once, and your app instantly gains access to the best models on the planet — unified, optimized, and future-proof.

Real-time Analytics Dashboard

Monitor every request, track costs, and analyze performance metrics in real time with our comprehensive analytics dashboard.

Intelligent routing across 40+ models

Drop-in replacement for your favorite SDKs

Integrated with your favorite providers

Connect seamlessly with OpenAI, Anthropic, Google, and more through a single unified interface.